Recently I posted

Matching “.” in UTF-8, in which I claimed that you could match

the regular-expression “.” in a UTF-8 stream with either four or five states in a byte-driven finite automaton,

depending how

you define the problem. That statement was arguably wrong, and you might need three more states, for a total of eight. But you

can make a case that really, only four should be needed, and another case calling for quite a few more.

Because that phrase “depending how you define the problem” is doing a lot of work.

But first, thanks: Ed Davies, whose blog contributions (1, 2, 3) were getting insufficient attention from me until Daphne Preston-Kendal insisted I look more closely.

To summarize Ed’s argument: There are a bunch of byte combinations that look (and work) like regular UTF-8 but are explicitly ruled out by the Unicode spec, in particular Section 3.9.3 and its Table 3.7.

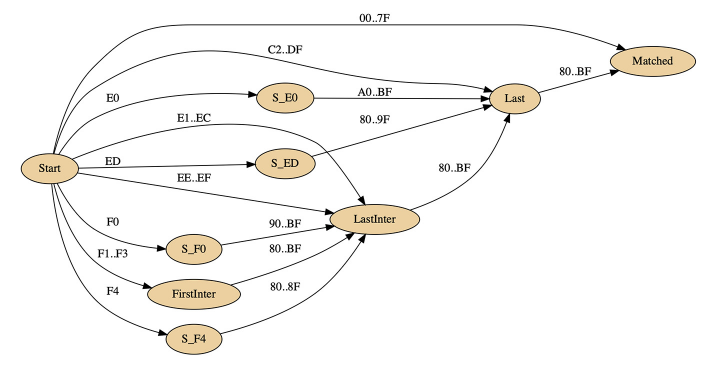

Moar States! · Ed posted a nice picture of a corrected 8-state automaton that will fail to match any of these forbidden sequences. ¶

(Original SVG here.)

I looked closely at Ed’s proposal and it made sense, so I implemented it and (more important) wrote a bunch of unit tests exploring the code space, and it indeed seems to accept/reject everything correctly per Unicode 3.9.3.

So, argument over, and I should go forward with the 8-state Davies automaton, right? Why am I feeling nervous and grumpy, then?

Not all Unicode · I’ve already mentioned in this series that your protocols and data structures just gotta support Unicode in the 21st century, but you almost certainly don’t want to support all the Unicode characters, where by “character” I mean, well… if you care at all about this stuff, please go read Unicode Character Repertoire Subsets (“Unichars for short), a draft inching its way through the IETF, with luck an RFC some day. And if you really care, dig into RFC 3454: PRECIS Framework: Preparation, Enforcement, and Comparison of Internationalized Strings in Application Protocols. Get a coffee first, PRECIS has multiple walls of text and isn’t simple at all. But it goes to tremendous lengths to address security issues and other best practices. ¶

If you don’t have the strength, take my word for it that the following things are true:

We don’t talk much about abstract characters; instead focus on the numeric “code points” that represent them.

JSON, for historical reasons, accepts all the code points.

There are several types of code points that don’t represent characters: “Surrogates”, “controls”, and “noncharacters”.

There are plenty of code points that are problematic because they can be used by phishers and other attackers to fool their victims because they look like other characters.

There are characters that you shouldn’t use because they represent one or another of the temporary historical hacks used in the process of migrating from previous encoding schemes to Unicode.

The consequence of all this is that there are many subsets of Unicode that you might want to restrict users of your protocols or data structures to:

JSON characters: That is to say, all of them, including all the bad stuff.

Unichars “Scalars”: Everything except the surrogates.

Unichars “XML characters”: Lots but not all of the problematic code points excluded.

Unichars “Unicode Assignables”: “All code points that are currently assigned, excluding legacy control codes, or that might in future be assigned.”

PRECIS “IdentifierClass”: “Strings that can be used to refer to, include, or communicate protocol strings like usernames, filenames, data feed identifiers, and chatroom name.”

PRECIS “FreeformClass”: “Strings that can be used in a free-form way, e.g., as a password in an authentication exchange or a nickname in a chatroom.”

Some variation where you don’t accept any unassigned code points; risky, because that changes with every Unicode release.

(I acknowledge that I am unreasonably fond of numbered lists, which is probably an admission that I should try harder to compose smoothly-flowing linear arguments that don’t need numbers.)

You’ll notice that I didn’t provide links for any of those entries. That’s because you really shouldn’t pick one without reading the underlying document describing why it exists.

What should you accept? · I dunno. None of the above are crazy. I’m kind of fond of Unicode Assignables, which I co-invented. The only thing I’m sure of is that you should not go with JSON Characters, because of the fact that its rules make the following chthonic horror perfectly legal: ¶

{"example": "\u0000\u0089\uDEAD\uD9BF\uDFFF"}

Unichars describes it:

The value of the “example” field contains the C0 control NUL, the C1 control "CHARACTER TABULATION WITH JUSTIFICATION", an unpaired surrogate, and the noncharacter U+7FFFF encoded per JSON rules as two escaped UTF-16 surrogate code points. It is unlikely to be useful as the value of a text field. That value cannot be serialized into well-formed UTF-8, but the behavior of libraries asked to parse the sample is unpredictable; some will silently parse this and generate an ill-formed UTF-8 string.

No, really.

What is Quamina for? · If you’re wondering what a “Quamina” is, you probably stumbled into this post through some link and, well, there’s a lot of history. Tl;dr: Quamina is a pattern-matching library in Go with an unusual (and fast) performance envelope; it can match thousands of Patterns to millions of JSON blobs per second. For much, much more, peruse the Quamina Diary series on this blog. ¶

Anyhow, all this work in being correctly restrictive as to the shape of the incoming UTF-8 was making me uncomfortable. Quamina is about telling you what byte patterns are in your incoming data, not enforcing rules about what should be there.

And it dawned on me that it might be useful to ask Quamina to look at a few hundred thousand inputs per second and tell you which had ill-formed-data problems. Quamina’s dumb-but-fast byte-driven finite automaton would be happy to do that, and very efficiently too.

Conclusion · So, having literally lain awake at night fretting over this, here’s what I think I’m going to do: ¶

I’ll implement a new Quamina pattern called

ill-formedor some such that will match any field that has busted UTF-8 of the kind we’ve been talking about here. It’d rely on an automaton that is basically the inverse of Davies’ state machine.By default, the meaning of “

.” will be “matches the Davies automaton”; it’ll match well-formed UTF-8 matching all code points except surrogates.I’ll figure out how to parameterize regular-expression matches so you can change the definition of “

.” to match one or more of the smaller subsets like those in the list above from Unichars and PRECIS.

But who knows, maybe I’ll end up changing my mind again. I already have, multiple times. Granted that implementing regular

expressions is hard, you’d think that matching “.” would be the easy part. Ha ha ha.

Comment feed for ongoing:

From: Robert Sayre (Dec 30 2024, at 13:10)

Had to look up "chthonic", but it's apt. I still think Bjoern has beaten this problem to a pulp.

https://bjoern.hoehrmann.de/utf-8/decoder/dfa/#design

[link]