“Meet Your New Assistant” says the announcement, going on with “Built With Llama 3”. And oh my goodness has it ever got a lot of coverage. So I thought I might as well try it.

My first cut was a little unfair; I asked it about a subject on which I am unchallenged as the world’s leading expert: Tim Bray. (That’s probably overstating it: My wife is clearly in the running.)

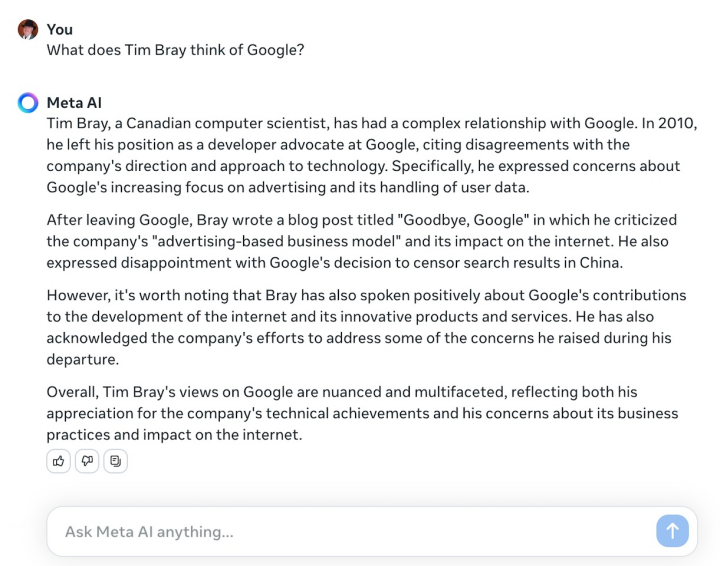

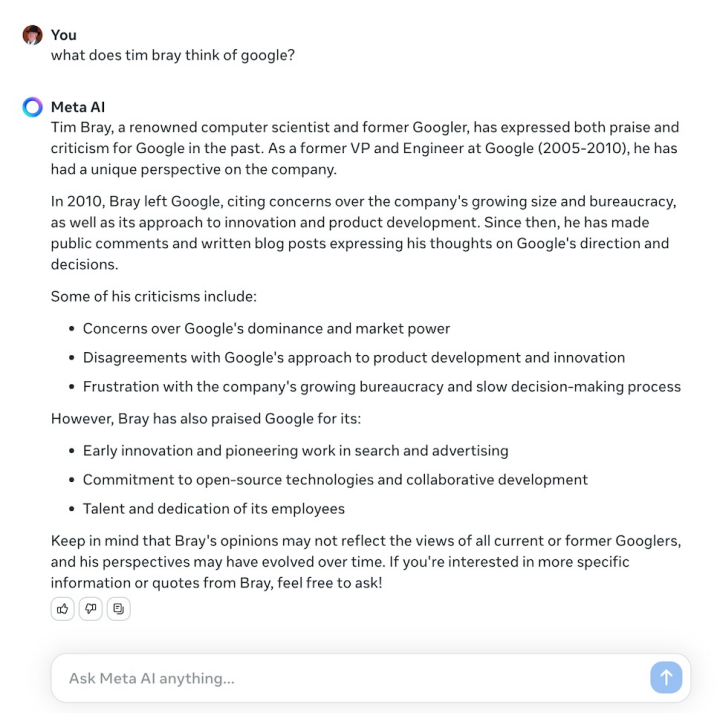

So I asked meta.ai “What does Tim Bray think of Google?” Twice; once on my phone while first exploring the idea, and again later on my computer. Before I go on, I should remark that both user interfaces are first-rate: Friction-free and ahead of the play-with-AI crowd. Anyhow, here are both answers; it may be relevant that I was logged into my long-lived Facebook account:

· · ·

The problem isn’t that these answers are really, really wrong (which they are). The problem is that they are terrifyingly plausible, and presented in a tone of serene confidence. For clarity:

I am not a Computer Scientist. Words mean things.

I worked for Google between March of 2010 and March of 2014.

I was never a VP there nor did I ever have “Engineer” in my title.

I did not write a blog post entitled “Goodbye, Google”. My exit post, Leaving Google, discussed neither advertising nor Google’s activities in China, nor was it critical of anything about Google except its choice of headquarters location. In fact, my disillusionment with Google (to be honest, with Big Tech generally) was slow to set in and really didn’t reach critical mass until these troubling Twenties.

The phrase “advertising-based business model”, presented in quotes, does not appear in this blog. Quotation marks have meaning.

My views are not, nor have they been, “complex and multifaceted”. I am embarrassingly mainstream. I shared the mainstream enchantment with the glamor of Big Tech until, sometime around 2020, I started sharing the mainstream disgruntlement.

I can neither recall nor find instances of me criticizing Google’s decision-making process, nor praising its Open-Source activities.

What troubles me is that all of the actions and opinions attributed to meta.ai’s version of Tim Bray are things that I might well have done or said. But I didn’t.

This is not a criticism of Meta; their claims about the size and sophistication of their Llama 3 model seem believable and, as I said, the interface is nifty.

Is it fair for me to criticize this particular product offering based on a single example? Well, first impressions are important. But for what it’s worth, I peppered it with a bunch of other general questions and the pattern repeats: Plausible narratives containing egregious factual errors.

I guess there’s no new news here; we already knew that LLMs are good at generating plausible-sounding narratives which are wrong. It comes back to what I discussed under the heading of “Meaning”. Still waiting for progress.

The nice thing about science is that it routinely features “error bars” on its graphs, showing both the finding and the degree of confidence in its accuracy.

AI/ML products in general don’t have them.

I don’t see how it’s sane or safe to rely on a technology that doesn’t have error bars.

Comment feed for ongoing:

From: Jacek Kopecky (Apr 19 2024, at 09:40)

Wow, oh my indeed!


From: Daniel Harka (Apr 19 2024, at 10:52)

Yeah. It still has a long way to go.


From: Pete Forman (Apr 19 2024, at 12:50)

+1 for error bars. Most people seem unaware of the empirical basis of science leading to such laughable notions as "scientifically impossible". It would be nice to think that LLMs will start to tag their results with uncertainty but I fear that if they do those will be as synthetic as the results themselves.


From: Nathan (Apr 19 2024, at 13:50)

These LLMs are designed to produce plausible-sounding text. The problem is they are always presented as if they produce meaning or accurate text. Those are two entirely different things.


From: Len Bullard (Apr 23 2024, at 07:19)

Did the same test a few weeks ago with ChatGPT and Gemini. The results were hilarious from my point of view. They do reinforce my opinions on the difference between fame (what people say about people) and notability (what is documented about what one has accomplished). It also revealed glaring differences in the training data given who controls or has access to resources. My conclusion is similar to yours: it’s not a lot better than party line gossip with regards to people. It’s pretty good if researching well-vetted topics.

Experiments with video and music generators are surprising in terms of the phenomenal progress given any mainstream homogeneous style. The video is still clunky but some of these can spin a good tale in short forms. Consistency in long forms still needs work. As for music, the output for a style that is mainstream, say cloning Taylor Swift or Johnny Cash, is unbelievably good. Folks in these markets have reasons to panic. Now is a good time to start celebrity cults because fidelity of fans is their only defense. As a side note the best of these systems brought in human experts, say music producers, to help train the models. It is eerily like people about to be laid off being required to train their replacements.

Note that the music industry machine has been cloning its talent for decades chasing fame and proclaimed notability in terms of Grammy awards. It is a corrupt and corrupting process that homogenizes art. That it is being undercut by machines that do precisely that is a delicious spy vs spy karma. We may be at a sea change where an artist really is better off being an indie who sells physical products, has fewer but faithful fans, and creates quirky music below the Sauron eye of AI. I’d rather be Kate Bush than Taylor Swift, money be damned.


From: Ed Schechter (Apr 23 2024, at 19:22)

ChatGPT answer to same question:

Doesn't make as many demonstrably wrong historical claims (just Amazon 2020).

Is this factually correct?

-----------------

Tim Bray has been vocal about his views on Google, particularly highlighting concerns about the company's business practices and impact on the internet. In his blog and public statements, he has criticized Google for issues related to privacy, market dominance, and the way it handles competition. Bray has also expressed concerns about Google's influence over web standards and the potential for it to shape the internet ecosystem in ways that could stifle innovation and competition.

Additionally, Bray's departure from Amazon in 2020 over its treatment of warehouse workers and whistleblowers also underscored his stance on corporate responsibility and ethics, which aligns with his critical views on large tech companies like Google. His criticisms often focus on the broader implications of how tech giants operate and their effects on society and the tech industry.


From: Gavin B. (Apr 29 2024, at 06:43)

Not Meta.ai but OpenAI:

[C]omplaint against OpenAI, alleging that the ChatGPT service violates GDPR rules since its information cannot be corrected if found inaccurate.

https://www.theregister.com/2024/04/29/openai_hit_by_gdpr_complaint/
