If you need to handle really a lot of traffic, there’s only one way to do it: sharding. Which is to say, splitting up the incoming requests among as many hosts (or Lambda functions, or message brokers, or data streams) as you need. Once you get this working you can handle an essentially unlimited request volume. Of course, you have to make choices on how you’re going to divide up the traffic among the shards. I’ve had intense exposure to the options since I came to work at AWS.

Random spray · This is the simplest thing imaginable. For each message, you make a UUID and use it as the Partition Key (if your downstream uses that for sharding), otherwise just pick a target shard using your favorite random number generator.

There’s a lot to like about it. Your front-end can be dumb and stateless and fast. The load will get dealt out evenly among the shards. Spoiler alert: I think this is a good choice and you should do it if you can possibly can.

A common variation on this theme involves auto-scaling. For example, if you have a fleet of hosts processing messages from an SQS queue, auto-scaling the fleet size based on the queue depth is a fairly common practice. Once again, admirably simple.

“Smart” sharding · The idea is that you do extra work to avoid problems, for example some shards getting overloaded and others being idle. Another kind of problem is one of your upstream sources sending “poison pill” messages that cause the receiving shard to lock up or otherwise misbehave

Load-sensitivity is one “smart” approach. The idea is that you keep track of the load on each shard, and selectively route traffic to the lightly-loaded ones and away from the busy ones. Simplest thing is, if you have some sort of load metric, always pick the shard with the lowest value.

I’ve seen this done, and work OK. But it isn’t that easy. You have to figure out a meaningful load metric (queue depth? how much traffic received recently? CPU load?) and communicate that from each shard to the front end.

If poison pills are your worry — this is not rare at all — the smartest approach is usually shuffle sharding. See Colm MacCárthaigh’s awesome thread on the subject, which you should go read if you haven’t already. He has pretty pictures (see below for one I stole) and math too!

Affinity · Also known as “session affinity”. Another term you sometimes hear is “sticky sessions”; for a discussion see this article over at Red Hat. The idea is that you route all the events from the same upstream source to the same shard, by using an account number or session identifier of some sort as a partition key.

Affinity is attractive: If all the clickstream clicks from the same user or state-change events from the same workflow or whatever go to the same host, you can hold the relevant state in that host’s memory, which means you can respond faster and hit your database less hard.

Ladies, enbies, gentlemen, and others: I’ve had really lousy luck with affinity in sharding. Lots of things can go wrong. The most obvious: When traffic isn’t evenly distributed among upstream sources.

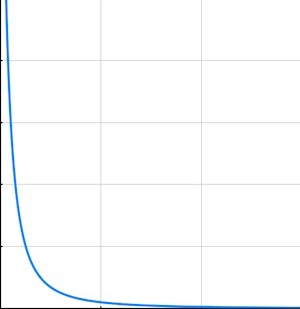

One time I was working with a stream of AWS customer events, and I thought it’d be smart to deal them out to Kinesis shards by account number. That was bad — the messages per second per account rate was distributed across account numbers in a classic inverse-square curve like the one in the margin. To make this concrete, in one of the early tests I noticed that the top 10 account numbers accounted for half the traffic. Ouch. (I don’t think this was a rare situation, I think it’s probably common as dirt.)

The general lesson is that if you unfortunately send too many “hot” upstream sources to the same shard, it’s easy to overload it. The worst case of this is when you get a single “whale” customer whose traffic is too much for any single shard.

So in this situation I’m talking about, we switched to random-spray, and the whole system settled down and ran nice and smooth. Except for, processing each message required consulting a database keyed by account number and the database bills got kind of high.

So I got what I thought was a brilliant idea, hid in a corner, and wrote a “best-effort affinity” library that tried to cluster requests for each customer on as few shards as possible. It seemed to work and our database bills went down by a factor of six and I felt smart.

Since then, it’s turned into a nightmare. There’s all sorts of special-case code to deal with extra-big whales and sudden surges and other corner cases we didn’t think of. Now people roll their eyes when smoke starts coming out of the service and mutter “that affinity thing”. They’re usually too polite to say “Tim’s dumb affinity code”.

That’s not all, folks · Here’s another way things can go wrong: What happens when you have session affinity, and you have per-session state built up in some host, and then that host goes down? (Not necessarily a crash, maybe you just have to patch the software.)

Now, because you’re a good designer, you’ve been writing all your updates to a journal of some sort, so when your shard goes pear-shaped, you find another host for it and replay the journal and you’re back in business.

To accomplish this you need to:

Reliably detect when a node fails. As opposed to being stuck in a long GC cycle, or waiting for a slow dependency.

Find a new host to place the shard on.

Find the right journal and replay it to reconstruct all the lost state.

Anyone can see this takes work. It can be done. It is being done inside a well-known AWS service I sit near. But the code isn’t simple at all, and on-call people sigh and mutter “shard migration” in exactly the same tone of voice they use for “Tim’s dumb affinity code.”

But the database! · So am I saying that storing state in shards is a no-no, that we should all go back to stateless random spray and pay the cost in time and compute to refresh our state on every damn message?

Well yeah, if you can. That fleet running my dumb affinity code? Turns out when we reduced the database bills by a factor of six we saved (blush) a laughably small amount of money.

What about latency, then? Well, if you were using a partition key for sharding, you can probably use it for retrieving state from a key/value store, like DynamoDB or Cassandra or Mongo. When things are set up well, you can expect steady-state retrieval latencies in the single-digit milliseconds. You might be able to use a caching accelerator like DynamoDB’s DAX and do a lot better.

In fact, if the sessions you might have tried to shard on have that inverse-square behavior I was talking about, where a small number of keys cover a high proportion of the traffic, that might actually help the caching work better.

But the cache is a distraction. The performance you’re going to get will depend on your record sizes and update patterns and anyhow you probabl don’t care about the mean or median as much as the P99.

Which is to say, run some tests. You might just find that you’re getting enough performance out of your database that you can random-spray across your shards, have a stateless front-end and auto-scaled back-end and sleep sound at night because your nice simple system pretty well takes care of itself.

Comment feed for ongoing:

From: Chris (Sep 26 2019, at 09:44)

Isn't this just pushing the sharding problem into the database?

[link]

From: John Cowan (Sep 26 2019, at 11:33)

Sounds like the solution to your database bills (if you end up needing one again) is random sharing + Elasticache.

[link]

From: Glenn Martin (Sep 27 2019, at 18:30)

There is a small typo in one of your final thoughts: "anyhow you probabl don’t"...

[link]

From: Mike Rhodes (Oct 05 2019, at 13:11)

Mostly, as noted, this is pushing the sharding down to the database layer. But, there has been a lot of work over the years put into database sharding tech, so the cost is amortised across a lot of use, and you can reap the benefits of that very cheaply these days.

[link]